Tuesday, December 21, 2010

Tuesday, November 23, 2010

Dropbox and shared folders

- Say you shared a folder with friends to compile a bunch of pictures together. What if, once everyone has contributed their pictures, you want to leave the share and start removing and editing files without bothering the others?

- What if you want to notify all the contributors about something specific to this shared folder?

"I think I have some solutions for you that will help in exactly what you are interested in doing.

If you would like to send a notification to all the members of a shared folder you can do the following:

1. Log in to your Dropbox account at https://www.dropbox.com/

2. Select the Events tab and select the shared folder from the left side of the window

3. Scroll all the way to the bottom of the page and you will see a text field that says 'Add a comment to recent events feed'

This will allow you to leave a comment to all the members of the shared folder. This will also send the members an email with this comment.

If you would like to leave a shared folder without the other members of the folder losing the information, you can log in to your Dropbox account, select the Sharing tab and click Options next to the folder you would like to leave. From here, it will give you the option to leave the shared folder. Once you choose to leave the shared folder, it will prompt you to leave the files on the other users' accounts. This will allow you to simply leave the folder and not affect anyone else.

You can also rejoin the folder at any time by clicking 'Show past shared folders' within the Sharing tab in your Dropbox account.

For more information on unsharing a folder, please visit our help center link here:

Monday, November 15, 2010

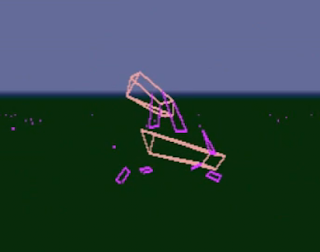

Reviving an old project : "Digging Engine"

I dug up an old project that lets you dig holes everywhere. At the end of the day, once you have made holes everywhere, the caves start to look really cool. The whole level in which I am flying was made by the user, not loaded from a file. You can create miles and miles of caves without slowing down the framerate of the engine. It was meant to be multi-player...

I wrote this digging engine a while back, in 1995. It was made for an artistic project by Maurice Benayoun: the Tunnel under the Atlantic, between Paris and Montreal.

Lots of cool new things could be done nowadays. Sounds like a good plan!

But I need to start by rewriting everything from scratch...

Tuesday, October 12, 2010

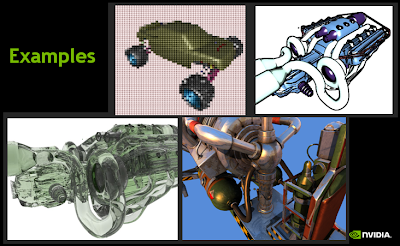

GTC talks

Thursday, April 22, 2010

Modifying Sony Webbie MP4 creation date for iMovie to be happy

I recently had an issue with my camcorder, which messed up the creation dates of my movies.

The Sony Webbie MPEG camcorder is cute but really messy. I wouldn't advise you to buy it, even though it is a rather cheap device.

The main problem is that the camcorder's clock doesn't move forward while the device is off (!!). So, every time I switched the camcorder on, the time started again from where I had originally set it in the options…

The consequences are not so obvious with a movie editor that can sort the original movies by name. But with iMovie, this time-stamp issue becomes really annoying, because iMovie can only organize events according to their creation time (once again, it is amazing to see how reluctant Apple is to expose features "in the name of simplicity").

A simple workaround: take all the files in alphabetical order and incrementally modify their creation time.

I tried Automator with no success: I couldn't find any command to modify the creation date.

A Bash shell script turned out to be the most convenient way. After picking up a few things from here

http://www.ibm.com/developerworks/library/l-bash.html

I wrote this script that can increment the creation-date according to the order in which the files are processed (alphabetically):

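    # Give each file an increasing creation date, in alphabetical (glob) order.
    # Minutes and seconds start at 10 so that they always stay two digits wide.
    s=10
    m=10
    for f in ./*
    do
        echo "SetFile -d 04/17/10\ 02:${m}:${s} $f"
        # Quote the date and the file name so that names with spaces work
        SetFile -d "04/17/10 02:${m}:${s}" "$f"
        s=$((s+1))
        if [ "$s" -eq 60 ]
        then
            # Seconds overflowed: go to the next minute and restart at :10
            m=$((m+1))
            s=10
        fi
    done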

Notes:

- The reason why I didn't use touch: it can only change the modification and access dates

- The SetFile command is part of the Xcode developer tools
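The script above stops being valid once the minutes reach 60. As a rough sketch (not what I actually ran), the timestamp can instead be computed from the file index, counting one second per file from the same 02:10:10 start. The helper name stamp_for_index is made up for this example, and it assumes the files all fit within the same day. The loop only prints the SetFile commands as a dry run:

```bash
# Hypothetical helper: timestamp for the n-th file (0-based),
# one second per file, starting at 02:10:10 on 04/17/10.
stamp_for_index() {
    local n=$1
    local base=$((2 * 3600 + 10 * 60 + 10))   # 02:10:10 expressed in seconds
    local t=$((base + n))
    # Assumption: t stays below 24 hours, so the date itself never changes
    printf '04/17/10 %02d:%02d:%02d\n' $((t / 3600)) $(((t / 60) % 60)) $((t % 60))
}

# Dry run: show the command that would be executed for each file
i=0
for f in ./*
do
    echo "SetFile -d \"$(stamp_for_index "$i")\" \"$f\""
    i=$((i + 1))
done
```

Replacing the echo with the actual SetFile call would apply the dates; the zero-padded printf keeps every field two digits wide, so the counters no longer need to restart at 10.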

Friday, April 2, 2010

Monday, March 15, 2010

Looking for animation technology at GDC...

Sunday, March 7, 2010

My 1990's hand made co-processor for my Amiga

Yet another prehistoric thing I took out from my boxes...

1991's vector Amiga 500 demo

I just discovered that someone posted a video capture of an old demo I wrote in 1991.

Wednesday, March 3, 2010

Mixing NVIDIA Technologies thanks to CgFX

I recently found it very interesting to push the limits of the NVIDIA CgFX runtime.

- Interfaces: very useful for putting an abstraction layer between what we want to compute and how it will be computed. Lighting is a good example: lights are exposed in the shader code as interfaces. The implementation of these lights (spot, point, directional...) is hidden and decoupled from the shader that uses the interface.

- Unsized arrays of parameters and/or interfaces: a good use of this feature is to store the light interfaces in such an array. The shader doesn't have to know how many lights the array contains, and the array doesn't have to care about the implementation of the lights it holds. So the array is a good way to store an undefined number of lights of various types.

- Custom states: states are used in the passes of the techniques. CgFX lets you customize states and create new ones. You can easily define special states that have no meaning in D3D or OpenGL but do have a meaning for what your application needs to do in the pass. These states are a perfect way to tell the application what to do in each pass. For example, you can create a "projection_mode" state that lets the effect tell the application to switch from perspective to orthographic projection. By the way, the regular OpenGL and D3D states in Cg are also custom states; they are simply shipped and implemented by the Cg runtime :-)

- Semantics: a very simple feature... but it allowed me to simulate handles to objects (more details below).

- A hairy API... but one that gives you lots of ways to dig into the loaded effect. People often complain that the Cg API is ugly or too complex. I somewhat agree. But my recent experience showed that this API in fact offers a lot of interesting ways to do what you need. As usual, there is no magic: flexibility often leads to a complex API.

- the first layer is the common way: provide an effect for each material. For example, an effect would be available for plastic shading, for objects that need a plastic look, etc.

- A second layer of effects acts at the scene level: prior to rendering any object, why not use the Technique/Pass paradigm to tell the application how it needs to process the scene?

- Pass 1: render the Opaque Objects in a specific render target; let's also store the camera distance of each pixel in another render target (a texture)

- Pass 2: render the transparent Objects in another render target (a texture); but let's keep the depth buffer of opaque objects so that we get a consistent result

- Pass 3: perform a fullscreen quad operation that reads the opaque and transparent textures and does the compositing. This operation makes it possible to fake some refraction on transparent triangles thanks to a bump-map, for example

- Pass 4: use the camera distance stored earlier to perform another pass that will blur the scene according to some Depth of field equations

- the scene-level pass uses an annotation to tell the underlying effects which interface should be used to generate the pixels. This means that a special interface is defined and that material effects have to use this interface rather than simply writing fragments themselves.

- the material effects receive the correct implementation of the "pixel output" interface, so that all the render targets get properly updated. Here, the material effect has no knowledge of what will finally be written to the output: it only provides all the data that might be required. The interface chosen by the scene-level effect does this job...

- the scene-level effect then contains a simple integer parameter whose name represents the texture target and whose semantic explicitly tells the application that this integer parameter is a texture resource

- This integer carries annotations. For example, one annotation tells that the format of the texture is RGBA32F; another gives the width and height of the texture

- Optix ray tracing (to give the web link...)

- CUDA (to give the web link...)

- Declare references to PTX code that we will need to send to Optix

- declare an Optix context

- associate the references of PTX code to various Optix layers

- declare arbitrary variables with default values that Optix will gather and send to the PTX code (some ray tracing code would for example need any sort of fine-tuning parameters for precision or recursive limits...)

- Declare the resources that OptiX needs: input resources (the pixel information needed to start the ray tracing); an output resource in which to write the final image of inter-reflections; intermediate buffers if needed...

- tell a CgFX pass that it needs to trigger the OptiX rendering, rather than doing as usual a simple OpenGL/D3D scene graph rendering

- Pass 1: render the scene with OpenGL : render 3 targets : colors, normals and world-space position of each fragment (pixel)

- Pass 2: trigger the OptiX rendering. The starting points of this rendering are the pixels of the previous render targets: given the camera position, it is possible to compute the reflection... The final reflection colors are then stored in a texture

- Pass 3: read the texture from previous OptiX pass and merge it with the Pass 1 RGBA color

- Pass 1: trigger the OptiX rendering. The RGBA scene result will be stored in a texture

- Pass 2: draw the texture with a fullscreen quad

- The 3D scene is mirrored within the OptiX environment (and hashed into 'acceleration structures', according to some fancy rules). This necessary redundancy can be tricky and takes up some video memory...

- The shaders that are written in Cg somehow also have to be written in CUDA for OptiX.

- CUDA doesn't provide texture sampling as good as a pixel shader's: mipmapping isn't available, and neither are cubemaps...

- Many other concerns.

- simple Glow post-processing

- convolution post-processing

- Bokeh filter (accurate depth of field)

- deferred shading (for example, check GDC about deferred shading using Compute...)

- etc.

Monday, March 1, 2010

Calderon Dolphin Slaughter in Denmark

These poor dolphins are stabbed a number of times and, as if that weren't enough, they bleed to death, probably in excruciating pain, while the whole town watches.

- do these people need to act like this in order to maintain their local way of life and industry? This could be possible. Maybe the local economy requires fishing these cetaceans. But I remain amazed that such a massive slaughter could really be needed.

- we should check how things really happen these days, because I had no way to know when these pictures were taken

Hello Honey,

yes, this is sad!

But I think there is something wrong with the message.

The Faroe Islands are an autonomous province of Denmark.

I think these islands are nearer to Norway, and Norway is known for disagreeing with the international agreement against whaling.

These islands are far away from any continent.

Yes, it’s bad that they are hunting whales and killing them in that way, but I think you must look at the whole situation of these islands.

They live from the whales and fish they hunt, and they have no other industry.

Another thing is that you don’t know how old these photos are…

warm regards

Wednesday, February 3, 2010

"The Cove" : this movie should be packaged with Seaworld trips!

Before going to Seaworld, I had already planned to watch "The Cove" ( http://www.thecovemovie.com/home.htm ).

This movie shows how wicked the Japanese government and some lobbies are toward dolphins (and whales...). In fact, the rest of the world (US, Europe...) is responsible too: we all started this business and now we close our eyes to the consequences. Japanese fishermen see it as a way to make money and we can't blame them too much for this. However, I do blame the fact that we let them do so and even help them...

It is good to know that this slaughter still happens in Japan. Disgusting and true.

Thursday, January 28, 2010

Quick trip to San Diego for the kids: Seaworld

Tuesday, January 12, 2010

Memory

In 1879, Abbé Moigno created a fancy way to keep more than 99 decimals of the number pi in mind: a small text in which each syllable (sound) stands for a digit.

The rest is essentially in French and I won't try to do the same for other languages. But you get the picture. I am pretty sure you can do it ;-)

3.14159265358979323846264338327950288419...

leads to the following:

Maints terriers de lapins ne gèlent = 3 1 4 1 5 9 2 6 5

Ma loi veut bien, combats mieux, ne méfaits! = 3 5 8 9 7 9 3 2 3 8

Riants jeunes gens, remuez moins vos mines = 4 6 2 6 4 3 3 8 3 2

Qu'un bon lacet nous fit voir à deux pas = 7 9 5 0 2 8 8 4 1 9

This technique is based on the following rule (again, I do it French-minded... 'Ne', 'Te'...):

- C/'Se'/'sss' = 0;

- 'Te'/'De' = 1;

- 'Ne' = 2;

- 'Me' = 3;

- 'Re' = 4;

- 'Le' = 5;

- 'Che'/'Je' = 6;

- 'Ke' = 7;

- 'Fe' = 8;

- 'Pe' = 9

"Sot, tu nous ments, rends le champ que fit Pan"
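To make the rule concrete, here is a small sketch in shell that turns a list of sounds back into digits, using only the table above. The function names sound_to_digit and decode_sounds are made up for this example, and the sounds have to be written out by hand: the script knows nothing about French pronunciation.

```bash
# Map one sound from the table above to its digit ('?' if unknown).
sound_to_digit() {
    case "$1" in
        Se)     echo 0 ;;
        Te|De)  echo 1 ;;
        Ne)     echo 2 ;;
        Me)     echo 3 ;;
        Re)     echo 4 ;;
        Le)     echo 5 ;;
        Che|Je) echo 6 ;;
        Ke)     echo 7 ;;
        Fe)     echo 8 ;;
        Pe)     echo 9 ;;
        *)      echo "?" ;;
    esac
}

# Decode a whole sequence of sounds into a string of digits.
decode_sounds() {
    local out="" s
    for s in "$@"
    do
        out="$out$(sound_to_digit "$s")"
    done
    echo "$out"
}

# "Maints terriers de lapins ne gèlent", sounds transcribed by hand
decode_sounds Me Te Re De Le Pe Ne Je Le   # prints 314159265
```

The same decoder applied to the sounds of the sentence "Sot, tu nous ments, rends le champ que fit Pan" (Se Te Ne Me Re Le Che Ke Fe Pe) gives back 0123456789, which is the rule itself.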

Monday, January 11, 2010

This Sunday, Arthur (13 months) really showed me he does understand everything we tell him (in French).